Peter K. Enns, Amelia Goranson, Jake Rothschild, and Gretchen Streett

The Pew Research Center recently released a report evaluating the accuracy of different types of survey samples. Verasight was not one of the survey companies used in the study. But given the Pew Research Center’s reputation for rigorous methods, we immediately wanted to know how the Verasight Community of survey takers would have performed if it had been part of the study. To assess Verasight’s accuracy, we asked the Verasight panel the same questions Pew asked in its study. Verasight was more accurate than all of the nonprobability samples the Pew study examined.

Overview of the Pew Study

The Pew study considered three types of nonprobability (or opt-in) samples. The specific data vendors are anonymized, but the Pew study included a single nonprobability panel, a panel aggregator (or marketplace) which combines respondents from multiple nonprobability sample sources, and a blend combining respondents from a single nonprobability panel with respondents from three sample aggregators. All three nonprobability samples used a common set of quotas on age by gender, race and ethnicity, and educational attainment. They also removed respondents that did not pass vendor-applied data quality checks including speeding, straightlining, and duplicate cases. Additionally, Pew applied post-stratification weights based on 11 variables or combinations of variables. Data were then compared to 28 different population benchmarks on topics such as military service, home ownership, citizenship, receipt of government benefits, marital status, vote choice, health conditions, and retirement account status. The population benchmarks came from the American Community Survey, Centers for Disease Control, Current Population Survey, 2020 Presidential Election, National Health and Nutrition Examination Survey, National Health Interview Survey, and Survey of Income and Program Participation.

How we determined Verasight was the most accurate

Between September 12 and 20, 2023, we interviewed 4,047 Verasight panelists (the average sample size in the Pew study was 4,990). Our survey included 25 of the 28 benchmarks in the Pew study. We compared responses in the Verasight survey to the benchmark values used in the Pew study. We did not ask about Covid-19 vaccination status, whether work had been affected by Covid-19, or respondent job status the previous week, because responses to these three questions in 2023, when we conducted our survey, would not be comparable to the 2021 benchmarks analyzed by the Pew study. We also omitted from the analysis any variables used to generate our post-stratification weights.

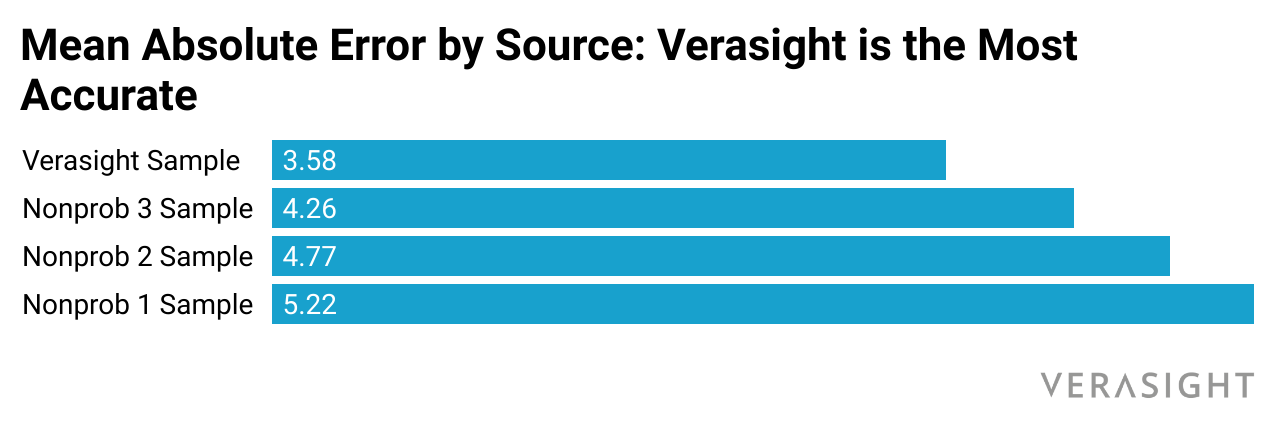

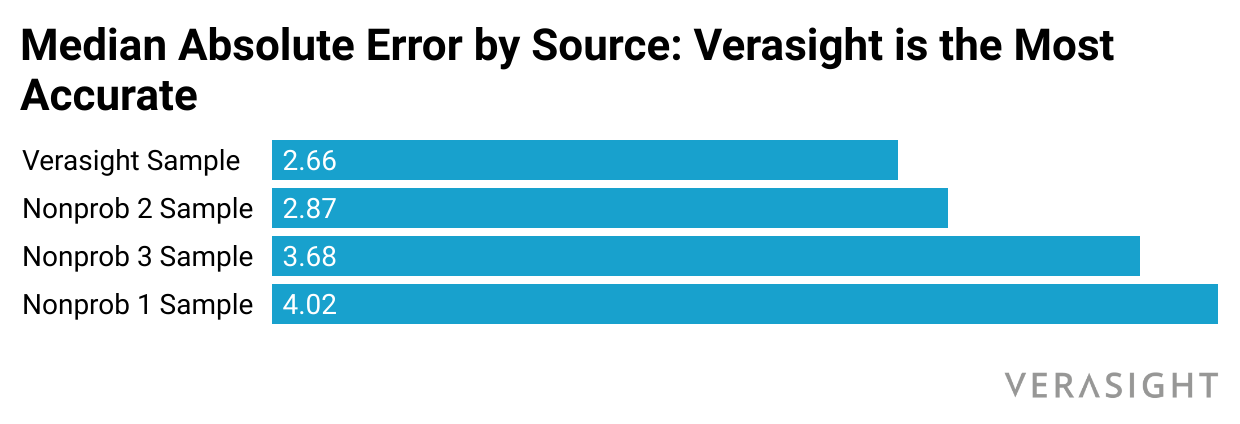

To evaluate error in the Verasight survey and the nonprobability surveys in the Pew study, we subtracted the population benchmark value from the weighted survey value for each response category. We then calculated the mean and median absolute error across these differences. Figures 1 and 2 show that Verasight was the most accurate on both these metrics.
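The error calculation described above can be sketched in a few lines of Python. This is only a minimal illustration: the category names and percentages below are hypothetical placeholders, not values from the Verasight survey or the Pew benchmarks, and the helper `absolute_errors` is a name introduced here for the example.

```python
from statistics import mean, median


def absolute_errors(weighted_estimates, benchmarks):
    """Return |weighted survey value - benchmark value| per response category."""
    return {
        category: abs(weighted_estimates[category] - benchmarks[category])
        for category in benchmarks
    }


# Hypothetical values in percentage points (NOT real survey or benchmark data).
benchmarks = {"owns_home": 65.0, "married": 50.0, "veteran": 7.0}
survey = {"owns_home": 63.5, "married": 52.0, "veteran": 7.5}

errors = absolute_errors(survey, benchmarks)

# Summarize accuracy across all response categories.
mean_abs_error = mean(errors.values())
median_abs_error = median(errors.values())

print(f"mean absolute error:   {mean_abs_error:.2f} points")
print(f"median absolute error: {median_abs_error:.2f} points")
```

Taking absolute values before averaging keeps overestimates and underestimates from cancelling each other out, and reporting the median alongside the mean shows whether a few large misses are driving the average.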

We are especially proud of our accuracy because we are able to deliver this data quality (plus additional services such as post-stratification weights, cleaned and labeled data, and full methodological documentation) at a price equal to or lower than that of comparable online sample providers. To make our data even more accessible, we also regularly offer free survey questions to students, early career professionals, nonprofits, and attendees at professional conferences, and we archive nonproprietary Verasight data with the Roper Center for Public Opinion Research for others to use.

This was a “best case” test for the other samples

Verasight’s accuracy is even more impressive when we consider that this was a “best case” test for the other samples in the study. First, the Verasight sample was almost 20% smaller than the Pew study samples (4,047 versus an average sample size of 4,990) and Verasight’s 8-day field period was shorter than that of any sample in the Pew study, where field periods ranged from 10 days to 22 days. Second, the nonprobability samples in the study all used a quota-based sampling strategy to ensure their samples were balanced on Age X Gender, Race/Ethnicity, and Education. Verasight did not use sample quotas, which suggests the Verasight panel is more representative of the general population than the nonprobability panels in the Pew study.

“Verasight was able to provide the most accurate nonprobability data with fewer respondents, a faster field period, and a simpler weighting procedure.”

Third, we opted for a relatively simple weighting strategy, weighting with no interactions and just 10 variables: sex, age, education, race/Hispanic ethnicity, Census region, metropolitan status, income, partisanship, smoking behavior, and home type. This contrasts with the Pew study, which weighted on Age X Gender, Education X Gender, Education X Age, Race/Ethnicity X Education, Born inside vs. outside the U.S. among Hispanics and Asian Americans, Years lived in the U.S., Census region X Metro/Non-metro, Volunteerism, Voter registration, Party affiliation, Frequency of Internet use, and Religious affiliation (this weighting was in addition to the quota sample described above). We could not weight on all of these variables because we did not ask all of them in our survey, but the fact that Verasight results were the most accurate with a much simpler weighting approach offers further validation of the Verasight data. In sum, Verasight was able to provide the most accurate nonprobability data with fewer respondents, a faster field period, and a simpler weighting procedure.

Why Verasight Data Lead the Industry in Terms of Accuracy

Several factors contribute to the accuracy of Verasight survey data. First, Verasight uses a variety of recruitment methods to ensure a representative panel. In addition to online targeting, Verasight uses probability sampling, including random address-based sampling and random person-to-person texting, to invite panelists. This multi-method approach improves accuracy, while also keeping Verasight prices comparable to (or less expensive than) nonprobability vendors. Second, Verasight panelists are verified via multi-step authentication, including providing an SMS response from a mobile phone registered with a major U.S. carrier (e.g., no VOIP or internet phones). Third, Verasight fairly compensates respondents for every survey taken (even if they end up being screened out), allows respondents to choose how they are compensated (Venmo, PayPal, gift card, pre-paid Visa card, or charitable donation), and never routes respondents from survey-to-survey. Our “Excellent” rating on a reputable online reviews website shows how much Verasight panelists appreciate taking our surveys and being part of the Verasight community.

Verasight is also an industry leader in data transparency. Verasight is a member of the American Association for Public Opinion Research Transparency Initiative and Verasight regularly archives nonproprietary survey data with the Roper Center for Public Opinion Research, where Verasight has received a 10/10 “Greatly Exceeds Requirements” Roper Transparency Score.

The Verasight Panel Also Compares Extremely Favorably to Probability Samples

While the nonprobability data analyzed above are most analogous to the Verasight panel in cost and sample type, the Pew study also included three probability samples. When we analyzed the probability samples in the Pew study, Verasight did extremely well in comparison to these samples.

Given the cost differences between probability and nonprobability survey data, Verasight’s relative accuracy might be especially surprising. Although it is impossible to know how much the Pew Research Center paid for the probability samples, NORC at the University of Chicago is a well-known probability sample provider whose pricing can be used to understand price differences between probability samples and Verasight data. Pricing for questions on NORC’s omnibus survey starts at $3,000 for three questions. A 50-question Verasight survey for academic or nonprofit researchers costs just $2,000 more than three omnibus questions at NORC. In other words, probability surveys often cost 10 times Verasight panel pricing.
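The "10 times" figure can be reproduced with a rough per-question calculation. The sketch below assumes that "$2,000 more" implies a total of $5,000 for the 50-question Verasight survey, and it ignores differences in sample size and other deliverables, which the prices quoted above do not specify.

```python
# Prices quoted in the text above.
norc_price = 3000          # dollars for three omnibus questions
norc_questions = 3

# Assumption: "$2,000 more than 3 omnibus questions" means $5,000 in total.
verasight_price = norc_price + 2000
verasight_questions = 50

norc_per_question = norc_price / norc_questions
verasight_per_question = verasight_price / verasight_questions

# Per-question price ratio (probability omnibus vs. Verasight panel).
ratio = norc_per_question / verasight_per_question

print(f"NORC omnibus: ${norc_per_question:,.0f} per question")
print(f"Verasight:    ${verasight_per_question:,.0f} per question")
print(f"Ratio:        {ratio:.0f}x")
```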

Despite these massive price differences, the Verasight panel does extremely well when compared to the probability samples in the Pew study. Across all response categories, Verasight was more accurate than at least one probability sample more than 50 percent of the time. Furthermore, Verasight estimates averaged within 1.5 percentage points of the probability samples: the median absolute difference was 1.21 percentage points and the mean absolute difference was 1.47 percentage points. Additionally, as discussed above, the smaller sample size, shorter fielding period, and more parsimonious weighting approach mean this was a “best case” for the other samples. In a true head-to-head comparison we would expect Verasight to do even better. Indeed, Verasight’s 2020 Election Polling was the most accurate (Verasight was previously named Reality Check Insights).

Even with this level of accuracy from the Verasight panel, some research needs require probability samples. When this is the case, Verasight regularly works with clients to provide random address-based samples and/or random person-to-person text samples. We also create custom sampling frames, to ensure clients obtain representative samples of specific groups of interest, such as small business owners, policy leaders, or likely primary voters. Regardless of research needs, Verasight prioritizes methodological rigor, accuracy, transparency, respect for survey respondents, and cost-effectiveness.

Suggested Citation:

Enns, Peter K., Amelia Goranson, Jake Rothschild, and Gretchen Streett. 2023. “We Replicated Pew Research Center’s Recent Benchmarking Study.” Verasight White Paper Series. November 15, 2023. https://surveys.verasight.io/pew-replication.

Author Bios:

Peter K. Enns is Co-founder and Chief Data Scientist at Verasight. He is also a professor in the Department of Government and in the Brooks School of Public Policy at Cornell University. He has published three books, dozens of academic articles (including the most accurate 2020 US presidential election forecast), and he is PI of the NSF-funded Collaborative Midterm Survey.

Amelia Goranson is a Data Scientist at Verasight. She has a PhD in Social Psychology from the University of North Carolina at Chapel Hill where she specialized in quantitative methods.

Jake Rothschild is a Senior Data Scientist at Verasight. He has a PhD in Political Science from Northwestern University where he specialized in quantitative methods. He is the co-author of the book The Partisan Next Door.

Gretchen Streett is a Data Scientist at Verasight. She has an MA in Quantitative Methods from Columbia University and previously worked at NORC at the University of Chicago.

Additional Survey Methodology Details: Verasight collected data for this survey from September 12 - September 20, 2023. The sampling criteria for this survey were: U.S. adult (age 18+). The selection criteria for the final sample were: Passed all data quality assurance checks, outlined below.

The Verasight data are weighted to match US Census data on age, race/ethnicity, sex, income, education, region, home type, and metropolitan status, NHIS benchmarks of smoking history, and population benchmarks of partisanship. The margin of sampling error, which accounts for the design effect and is calculated using the classical random sampling formula, is +/- 2.3%.
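For readers who want to see how a design-effect-adjusted margin of error is typically computed, here is a minimal sketch. It assumes a 95% confidence level, the conservative proportion p = 0.5, and the Kish approximation for the design effect from the survey weights. The document does not state which design effect formula Verasight used, so `kish_design_effect` is an illustrative assumption rather than a description of the actual procedure.

```python
import math


def kish_design_effect(weights):
    """Kish approximation of the design effect: n * sum(w^2) / (sum(w))^2."""
    n = len(weights)
    return n * sum(w * w for w in weights) / sum(weights) ** 2


def margin_of_error(n, design_effect=1.0, p=0.5, z=1.96):
    """Classical margin of sampling error for a proportion, inflated by a design effect."""
    return z * math.sqrt(design_effect * p * (1 - p) / n)


# With the reported sample size and no design effect (equal weights):
unweighted_moe = margin_of_error(4047)
print(f"n = 4,047, design effect 1.0: +/- {100 * unweighted_moe:.1f} points")

# Hypothetical weights, only to show how unequal weights raise the design effect.
example_weights = [1.0, 3.0]
print(f"example design effect: {kish_design_effect(example_weights):.2f}")
```

Under these assumptions the unadjusted formula gives roughly +/- 1.5 points for 4,047 respondents, so the reported +/- 2.3% would correspond to a design effect somewhat above 2. That is a back-of-the-envelope inference from the stated numbers, not a figure reported by the authors.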

All respondents were recruited from the Verasight Community, which is composed of individuals recruited via random address-based sampling, random person-to-person text messaging, and dynamic online targeting. All Verasight community members are verified via multi-step authentication, including providing an SMS response from a mobile phone registered with a major U.S. carrier (e.g., no VOIP or internet phones) as well as within-survey technology, including verifying the absence of non-human responses with technologies such as Google reCAPTCHA v3. Those who exhibit low-quality response behaviors over time, such as straight-lining or speeding, are also removed and prohibited from further participation in the community. Verasight Community members receive points for taking surveys that can be redeemed for Venmo or PayPal payments, gift cards, or charitable donations. Respondents are never routed from one survey to another and receive compensation for every invited survey, so there is never an incentive to respond strategically to survey qualification screener questions. To further ensure data quality, the Verasight data team implements a number of post-data collection quality assurance procedures, including confirming that all responses correspond with U.S. IP addresses, confirming no duplicate respondents, verifying the absence of non-human responses, and removing any respondents who failed in-survey attention, speeding, and/or straight-lining checks. Unmeasured error in this or any other survey may exist. Verasight is a member of the American Association for Public Opinion Research Transparency Initiative.
